Watching a Train Wreck | University of Notre Dame

Though social media companies court criticism with whom they choose to ban, tech ethics experts say the more important role these organizations play takes place behind the scenes, in what they recommend.

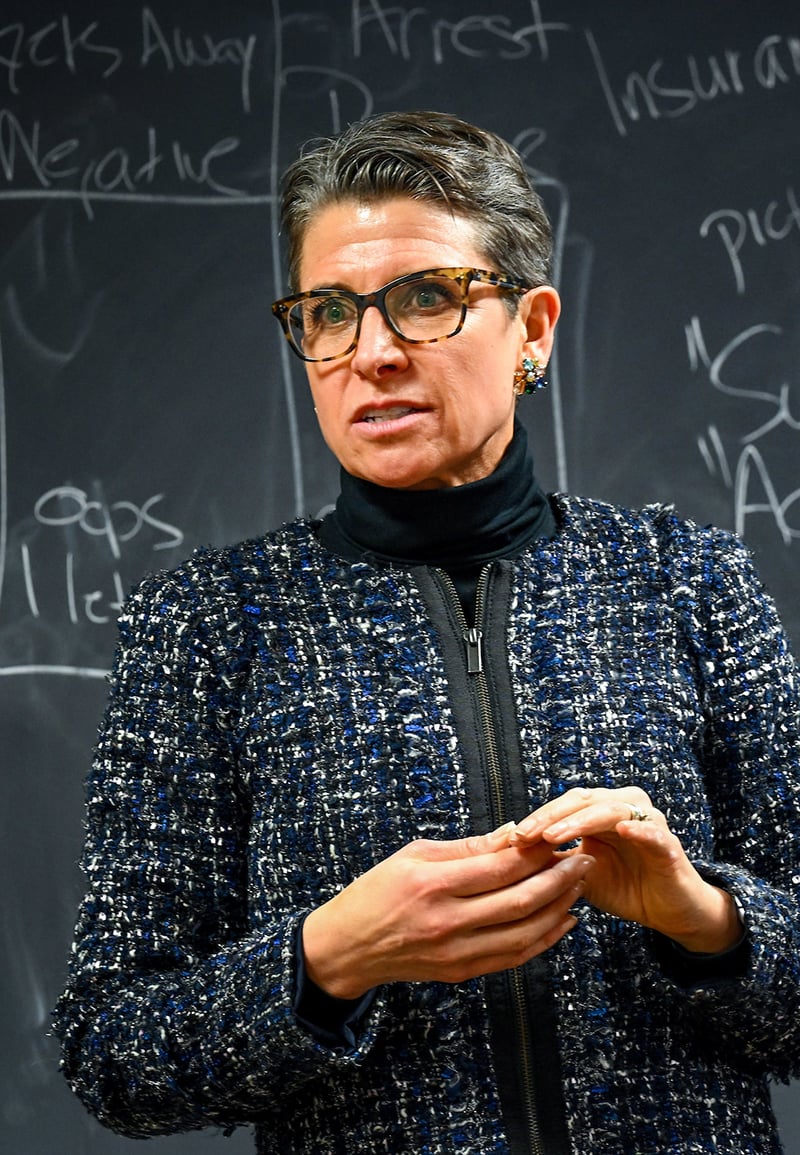

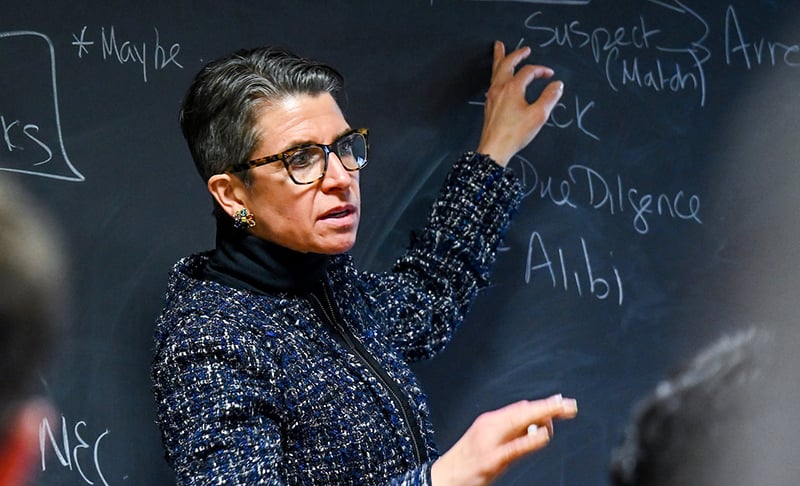

Kirsten Martin, director of the Notre Dame Technology Ethics Center (ND TEC), argues that optimizing recommendations based on a single variable, engagement, is an inherently value-laden decision.

Human nature may be fascinated by and drawn to the most polarizing content: we just can't look away from a train wreck. But there are still limits. Social media platforms like Facebook and Twitter continually struggle to find the right balance between free speech and moderation, she says.

“There is a point at which people leave the platform,” Martin says. “Totally unmoderated content, where you can post material as awful as you want, there’s a reason why people don’t flock to it. Because while it seems like a train wreck when we see it, we don’t want to be inundated with it all the time. I think there is a natural pushback.”

Elon Musk’s recent changes at Twitter have transformed this discussion from an academic exercise into a real-time test case. Musk may have thought the question of whether to ban Donald Trump was central, Martin says. A single executive can decide on a ban, but deciding what to recommend takes technology like algorithms and artificial intelligence, and people to design and run it.

“The thing that’s different right now with Twitter is getting rid of all the people that actually did that,” Martin says. “The content moderation algorithm is only as good as the people that labeled it. If you change the people that are making those decisions or if you get rid of them, then your content moderation algorithm is going to go stale, and fairly quickly.”

Martin, an expert in privacy, technology and business ethics and the William P. and Hazel B. White Center Professor of Technology Ethics in the Mendoza College of Business, has closely studied content promotion. Wary of criticism over online misinformation before the 2016 presidential election, she says, social media companies put up new guardrails on what content and groups to recommend in the runup to the 2020 election.

Facebook and Twitter were consciously proactive in content moderation but stopped after the polls closed. Martin says Facebook “thought the election was over” and knew its algorithms were recommending hate groups but did not stop because “that type of material got so much engagement.” With more than 1 billion users, the impact was profound.

Martin wrote an article on this topic for a case study textbook she edited, “Ethics of Data and Analytics,” published in 2022. In “Recommending an Insurrection: Facebook and Recommendation Algorithms,” she argues that Facebook made conscious decisions to prioritize engagement because that was its chosen metric for success.

“While the takedown of a single account may make headlines, the subtle promotion and recommendation of content drove user engagement,” she wrote. “And, as Facebook and other platforms found out, user engagement did not always correspond with the best content.”

Facebook’s own self-assessment found that its technology led to misinformation and radicalization. In April 2021, an internal Facebook report found that “Facebook failed to stop an influential movement from using its platform to delegitimize the election, encourage violence, and help incite the Capitol riot.”

A central question is whether the problem is the fault of the platform or its users. Martin says this debate within the philosophy of technology resembles the conflict over guns, where some people blame the guns and others blame the people who use them to cause harm.

“Either the technology is a neutral blank slate, or on the other end of the spectrum, technology determines everything and almost evolves on its own,” she says. “Either way, whether the company is shepherding this deterministic technology or blaming it on the users, the company that actually designs it has essentially no responsibility whatsoever.

“That’s what I mean by companies hiding behind this, almost saying, ‘Both the process by which the decisions are made and the decision itself are so black-boxed or so neutral that I’m not responsible for any of its design or outcome.’”

Martin rejects both claims.

One example that illustrates her conviction is Facebook’s promotion of super users, people who post content constantly. The company amplified super users because that drove engagement, even though these users tended to post more hate speech. Think Russian troll farms.

Computer engineers discovered this pattern and proposed fixing it by tweaking the algorithm. Leaked documents have revealed that the company’s policy shop overruled the engineers because it feared a hit to engagement. It also feared being accused of political bias, because far-right groups were often super users.

Another example in Martin’s textbook features an Amazon driver fired after four years of delivering packages around Phoenix. He received an automated email because the algorithms tracking his performance “decided he wasn’t doing his job properly.”

The company was aware that delegating the firing decision to machines could lead to mistakes and damaging headlines, “but decided it was cheaper to trust the algorithms than to pay people to investigate mistaken firings so long as the drivers could be replaced easily.” Martin instead argues that acknowledging the “value-laden biases of technology” is necessary to preserve people’s ability to control the design, development and deployment of that technology.